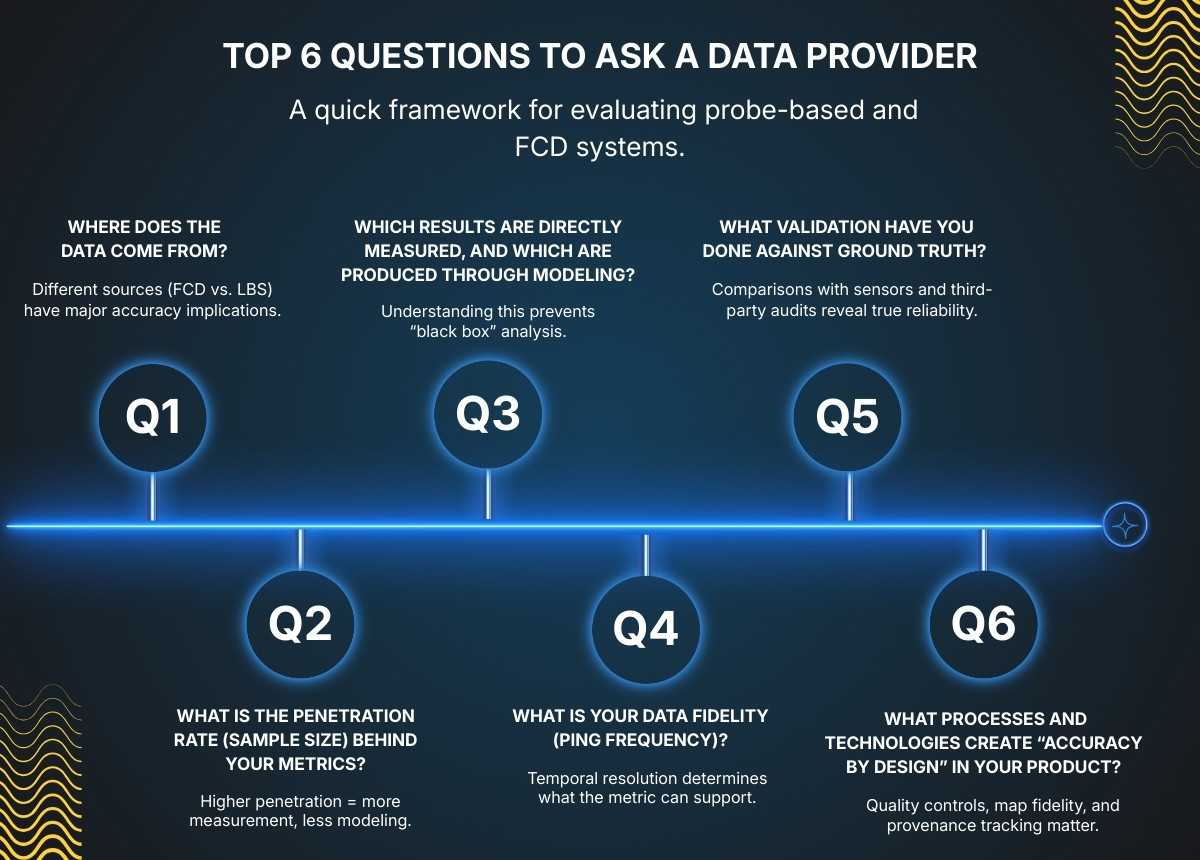

Top 6 questions to ask a data provider (and why they matter)

A practical guide to the essential questions every agency should ask to evaluate the quality, transparency, and reliability of a traffic data provider.

When traffic engineers first encounter floating car data (FCD) or other probe-based datasets, it’s natural, and necessary, to ask tough questions. These datasets can be incredibly powerful, but only if you understand how they’re built and what their limitations are.

During our recent webinar, Big Data That Delivers: Accuracy, Governance, and Agency Best Practices, panelists from leading organizations agreed on one takeaway: traffic engineers need a clear, practical list of questions to ask when evaluating a new data provider. The panelists were Craig Smith (Director Enterprise - Geospatial, TomTom), Jesse Coleman (Manager, Transportation Data & Analytics, City of Toronto), Jesse Newberry (Principal Digital Solutions Technologist, HNTB), and Matthew Konski, MASc, BEng (Senior Data Solutions Engineer, Altitude by Geotab). With so many probe-based and big-data products entering the market, knowing what to ask is essential to understanding data quality, transparency, and suitability for real-world applications.

Not all data is created equal, and not all vendors explain what happens behind the scenes.

The purpose of this article is to offer a practical starting point: a short list of essential questions that will help determine whether a dataset is reliable, transparent, and suitable for your traffic studies. These questions will help you cut through uncertainty and make defensible decisions.

1. Where does your data come from?

Understanding the underlying data source, whether in-vehicle GPS, fleet telematics, mobile apps, or LBS data, is fundamental to assessing accuracy and relevance.

For example, when selecting a data provider for an origin–destination or travel-time study, the source matters greatly. Floating Car Data (FCD) comes from true GNSS traces captured while vehicles are moving on the road network, offering high positional accuracy, consistent sampling, and reliable route reconstruction. Location-Based Services (LBS) data, by contrast, typically originates from weather, social media, food delivery, and gaming apps, producing irregular, event-driven pings that rely on lower-accuracy location methods. In this case, understanding the data source alone is enough to anticipate the accuracy and defensibility of your study’s outcomes.

2. What is the penetration rate (sample size) behind your metrics?

Penetration rate directly affects reliability. High penetration means more measurement and less modeling; low penetration means you may be looking at estimates rather than observations.

Penetration rate is the percentage of real-world traffic that a dataset captures on a given road, within a region, or during a specific time period. It is a key indicator of how accurate big-data outputs will be for speeds, travel times, OD flows, and volume estimation.

Because penetration varies by geography, road class, day of week, time of day, and data source, the best practice when using big data for the first time in a new jurisdiction is to complete a joint penetration-rate validation. Agencies collect ground-truth volumes, and the provider supplies observed probe counts for the same locations and time periods. Comparing the two gives a clear, evidence-based understanding of local penetration rates and helps determine whether the data is fit for your intended study.

An exception exists when the provider can supply robust, independently validated penetration-rate studies that are relevant to your region or roadway context. If these studies are comprehensive, transparent, and demonstrate penetration levels that meet your requirements, they may offer sufficient confidence without requiring a full validation effort.
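The joint validation described in this section comes down to a simple ratio at each site and time period: observed probe count divided by ground-truth volume. The following is a minimal Python sketch of that comparison. The `SiteCount` structure, the site names, and all counts are hypothetical and for illustration only; they do not come from any provider or agency.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SiteCount:
    site_id: str               # hypothetical site label
    period: str                # hypothetical time-period label
    ground_truth_volume: int   # vehicles counted by the agency
    probe_count: int           # probe vehicles reported by the provider


def penetration_rate(site: SiteCount) -> Optional[float]:
    """Probe share of counted traffic, in percent; None if nothing was counted."""
    if site.ground_truth_volume <= 0:
        return None
    return 100.0 * site.probe_count / site.ground_truth_volume


# Illustrative numbers only, not real measurements.
sites = [
    SiteCount("arterial_A", "weekday_am_peak", 1200, 96),
    SiteCount("arterial_A", "weekday_overnight", 150, 6),
    SiteCount("local_B", "weekday_am_peak", 200, 5),
]

rates = {(s.site_id, s.period): penetration_rate(s) for s in sites}

for (site_id, period), rate in rates.items():
    label = "n/a" if rate is None else f"{rate:.1f}%"
    print(site_id, period, label)
```

Keeping the rate per site and per period, rather than one regional average, reflects the point that penetration varies by geography, road class, and time of day.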

3. Which results are directly measured, and which are produced through modeling?

Ask your provider to clearly distinguish which metrics are directly measured and which are modeled.

Many vendors combine real probe observations with statistical or machine-learning inference, and understanding the difference is critical. Providers should explain which metrics come straight from observed vehicle traces and which rely on assumptions, estimation, or modeling techniques. This transparency helps you understand confidence levels, limitations, and the appropriate use of each metric, rather than treating the dataset as a “black box.”

4. What is your data fidelity (ping frequency)?

Data fidelity, or ping frequency, determines the analytical resolution a dataset can support.

For example, a 15–30-second ping rate may be sufficient for corridor-level speed and travel-time monitoring, but intersection performance or turning movement detection often requires sub-5-second fidelity.

Understanding the actual sampling rate ensures the data is used in the right context and prevents it from being applied to analyses where the temporal resolution is insufficient.
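One way to check the actual sampling rate is to compute the gaps between consecutive pings in a sample of raw traces. The sketch below is a rough illustration in Python. The function names, the use of the median gap as the summary statistic, and the sample timestamps are all assumptions made for this example; the 5-second threshold mirrors the sub-5-second guideline mentioned above.

```python
from statistics import median


def median_ping_interval(timestamps_s):
    """Median gap, in seconds, between consecutive pings of a single trip."""
    ordered = sorted(timestamps_s)
    gaps = [later - earlier for earlier, later in zip(ordered, ordered[1:])]
    if not gaps:
        raise ValueError("need at least two pings to measure an interval")
    return median(gaps)


def supports_intersection_analysis(timestamps_s, max_interval_s=5.0):
    """True if the trip's median ping gap is below the chosen threshold."""
    return median_ping_interval(timestamps_s) < max_interval_s


# Hypothetical ping timestamps (seconds since trip start).
dense_trip = [0, 1, 2, 3, 5, 6]
sparse_trip = [0, 15, 30, 60, 75]

print(median_ping_interval(dense_trip), supports_intersection_analysis(dense_trip))
print(median_ping_interval(sparse_trip), supports_intersection_analysis(sparse_trip))
```

The median is used here so that a few long gaps, such as a signal dropout, do not dominate the result; a provider may summarize ping frequency differently, so it is worth asking which statistic their stated rate refers to.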

5. What validation have you done against ground truth?

Validation is one of the strongest indicators of whether a dataset can be trusted. A robust data provider should already have completed systematic validation against real-world measurements before presenting their product to agencies. This includes:

- Comparisons with permanent counters, Bluetooth sensors, or loop detectors

- Independent third-party validation (e.g., ETC studies)

- Clear documentation of error rates and assumptions

Selecting a provider with proven, transparent validation enables agencies to adopt probe-based data more confidently, integrate it more quickly into operations, and reduce the burden of verification work internally.
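When reviewing a provider's validation documentation, or running a spot check of your own, it helps to know what the reported error figures mean. The sketch below computes three common summary measures (mean bias, mean absolute error, and mean absolute percentage error) for probe speeds against reference sensor speeds. The choice of metrics, the function name, and the sample values are assumptions for illustration; a given provider's validation study may report different measures.

```python
def validation_errors(probe_speeds, reference_speeds):
    """Compare probe speeds with reference speeds for matching segments and periods."""
    if len(probe_speeds) != len(reference_speeds) or not probe_speeds:
        raise ValueError("inputs must be non-empty and of equal length")
    if any(r <= 0 for r in reference_speeds):
        raise ValueError("reference speeds must be positive")

    n = len(probe_speeds)
    errors = [p - r for p, r in zip(probe_speeds, reference_speeds)]

    return {
        # Signed average error: negative means probes read slower than the reference.
        "bias": sum(errors) / n,
        # Average size of the error, ignoring direction.
        "mae": sum(abs(e) for e in errors) / n,
        # Average error as a percentage of the reference value.
        "mape": 100.0 * sum(abs(e) / r for e, r in zip(errors, reference_speeds)) / n,
    }


# Hypothetical speeds in km/h for four matched observations.
probe = [48.0, 52.0, 30.0, 66.0]
reference = [50.0, 50.0, 40.0, 60.0]

result = validation_errors(probe, reference)
print(result)
```

Reporting bias alongside absolute error matters because errors in opposite directions can cancel out in the bias while still being large individually.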

6. What processes and technologies create “accuracy by design” in your product?

Beyond data sources and sample size, it’s important to understand how a provider ensures accuracy throughout their entire system. Leading vendors engineer quality and consistency into every stage of their product, not as optional add-ons but as core design principles. Ask your provider what processes, techniques, and technologies they use to maintain accuracy at scale.

Strong practices typically include:

- Embedding quality controls directly into ingestion pipelines

- Maintaining high geospatial fidelity and frequent map refresh rates

- Tracking provenance for every data point

- Regularly comparing outputs against ground truth measurements

Vendors that invest in these practices, such as TomTom and Altitude, build accuracy into the dataset from the moment data is collected, ensuring consistent, defensible results for traffic analysis.

Conclusion

Choosing the right data provider isn’t just about comparing features; it’s about understanding how the data is created, validated, and aligned with your operational needs. The questions you ask upfront determine how confidently you can rely on the insights later. By approaching vendors with a structured, informed checklist, agencies can build stronger partnerships and unlock far more value from their data investments.

In the end, accuracy isn’t a number; it’s a relationship. The industry is shifting from a “black box” mindset toward a collaborative ecosystem built on transparency, shared validation, open practices, and frequent communication between agencies and vendors. Accuracy is no longer just a feature of the dataset; it’s the outcome of an ongoing partnership grounded in trust and clarity.

If you’re interested in the deeper discussion, including real-world examples, agency perspectives, and insights from TomTom, Altitude by Geotab, the City of Toronto, HNTB, and SMATS, watch the full webinar recording here.